Apple to Scan iPhones for Child Abuse Content

Apple will be scanning users’ phones for child abuse content, according to a recent announcement from the company.

Stopping the proliferation of child sexual abuse content is a noble goal, no doubt. But this is an obvious nightmare for Apple users living in one of the Five Eyes countries or any other police state.

Matthew Green, a highly respected cryptography professor, covered the news on Twitter. “These tools will allow Apple to scan your iPhone photos for photos that match a specific perceptual hash, and report them to Apple servers if too many appear,” he wrote in one tweet. “Initially I understand this will be used to perform client-side scanning for cloud-stored photos. Eventually, it could be a key ingredient in adding surveillance to encrypted messaging systems.”
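To make “match a specific perceptual hash” and “if too many appear” more concrete, here is a minimal sketch of a very simple perceptual hash (an “average hash”) with a distance tolerance and a match-count threshold. It is only an illustration: Apple’s system uses its own neural-network-based hash (NeuralHash), and the 8x8 grayscale input, the function names, the Hamming-distance tolerance and the threshold of 3 are all assumptions made for this example.

```python
from typing import List, Sequence

# Hypothetical input format: an 8x8 grid of grayscale values (0-255),
# i.e. a photo that has already been shrunk and converted to gray.
Image = List[List[int]]


def average_hash(img: Image) -> int:
    """64-bit 'average hash': one bit per pixel, set when the pixel is brighter than the mean."""
    pixels = [p for row in img for p in row]
    mean = sum(pixels) / len(pixels)
    bits = 0
    for p in pixels:
        bits = (bits << 1) | (1 if p > mean else 0)
    return bits


def hamming(a: int, b: int) -> int:
    """Number of bit positions in which two hashes differ."""
    return bin(a ^ b).count("1")


def is_match(h: int, database: Sequence[int], max_distance: int = 4) -> bool:
    """Assumed rule: a hash matches if it is within max_distance bits of any known hash."""
    return any(hamming(h, known) <= max_distance for known in database)


def should_report(photos: Sequence[Image], database: Sequence[int], threshold: int = 3) -> bool:
    """Assumed rule: report only when at least `threshold` photos match the database."""
    matches = sum(1 for img in photos if is_match(average_hash(img), database))
    return matches >= threshold


# A gradient image and a uniformly brightened copy: the pixel values differ,
# but the perceptual hash is identical, so the copy still matches.
original = [[(r * 8 + c) * 4 for c in range(8)] for r in range(8)]
brightened = [[min(255, p + 3) for p in row] for row in original]
database = [average_hash(original)]
print(is_match(average_hash(brightened), database))     # True
print(should_report([original, brightened], database))  # False: only 2 matches, threshold is 3
```

The tolerance (`max_distance`) is what lets near-duplicates match even after small edits, and it is also the reason two unrelated images can occasionally land close enough to count as a match.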

Green's Twitter thread touches on some of the concerns with this system:

“The way Apple is doing this launch, they’re going to start with non-E2E photos that people have already shared with the cloud. So it doesn’t ‘hurt’ anyone’s privacy. But you have to ask why anyone would develop a system like this if scanning E2E photos weren’t the goal.”

Initially, I thought Green’s source had misled him about the scope of Apple’s new initiative. However, someone in the Twitter thread posted a link to Apple’s announcement. It is real. Here is the relevant portion of the announcement:

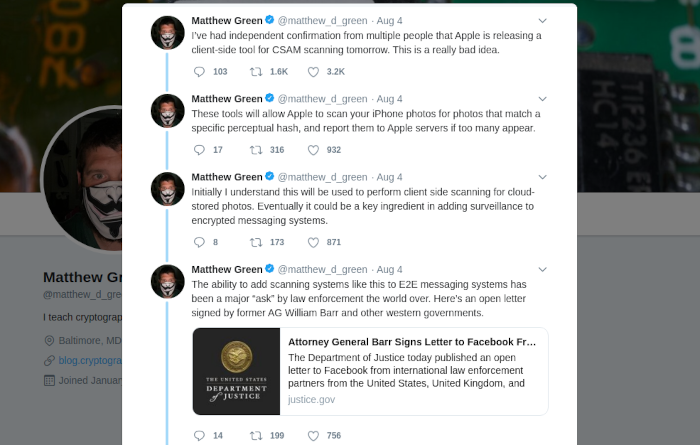

Another important concern is the spread of Child Sexual Abuse Material (CSAM) online. CSAM refers to content that depicts sexually explicit activities involving a child.

To help address this, new technology in iOS and iPadOS will allow Apple to detect known CSAM images stored in iCloud Photos. This will enable Apple to report these instances to the National Center for Missing and Exploited Children (NCMEC). NCMEC acts as a comprehensive reporting center for CSAM and works in collaboration with law enforcement agencies across the United States.

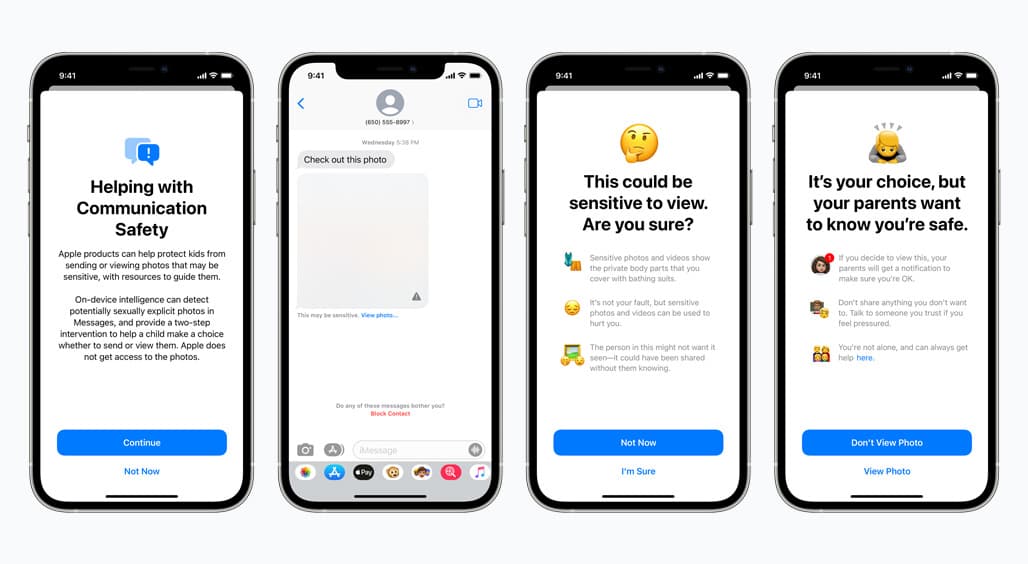

Apple’s method of detecting known CSAM is designed with user privacy in mind. Instead of scanning images in the cloud, the system performs on-device matching using a database of known CSAM image hashes provided by NCMEC and other child safety organizations. Apple further transforms this database into an unreadable set of hashes that is securely stored on users’ devices.

Before an image is stored in iCloud Photos, an on-device matching process is performed for that image against the known CSAM hashes. This matching process is powered by a cryptographic technology called private set intersection, which determines if there is a match without revealing the result. The device creates a cryptographic safety voucher that encodes the match result along with additional encrypted data about the image. This voucher is uploaded to iCloud Photos along with the image.

Using another technology called threshold secret sharing, the system ensures the contents of the safety vouchers cannot be interpreted by Apple unless the iCloud Photos account crosses a threshold of known CSAM content. The threshold is set to provide an extremely high level of accuracy and ensures less than a one in one trillion chance per year of incorrectly flagging a given account.

Only when the threshold is exceeded does the cryptographic technology allow Apple to interpret the contents of the safety vouchers associated with the matching CSAM images. Apple then manually reviews each report to confirm there is a match, disables the user’s account, and sends a report to NCMEC. If a user feels their account has been mistakenly flagged they can file an appeal to have their account reinstated.

This innovative new technology allows Apple to provide valuable and actionable information to NCMEC and law enforcement regarding the proliferation of known CSAM. And it does so while providing significant privacy benefits over existing techniques since Apple only learns about users’ photos if they have a collection of known CSAM in their iCloud Photos account. Even in these cases, Apple only learns about images that match known CSAM.\
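The announcement does not explain how threshold secret sharing works. The textbook construction is Shamir’s secret sharing: a secret is split into shares so that any t of them reconstruct it, while fewer than t reveal nothing about it. The sketch below is that generic scheme, not Apple’s actual protocol; the prime field, the threshold of 3 and the idea of one share per matching voucher are illustrative assumptions. In a design of this shape, each matching image would contribute one share of a key, so the server could recover the key only after seeing at least t matches.

```python
import secrets
from typing import List, Tuple

# Illustrative field: the Mersenne prime 2**127 - 1. All arithmetic is mod PRIME.
PRIME = 2**127 - 1


def split_secret(secret: int, threshold: int, num_shares: int) -> List[Tuple[int, int]]:
    """Shamir split: hide `secret` as the constant term of a random polynomial
    of degree threshold - 1 and hand out the points (x, f(x)) for x = 1..num_shares."""
    if not 0 <= secret < PRIME:
        raise ValueError("secret must be an element of the field")
    coeffs = [secret] + [secrets.randbelow(PRIME) for _ in range(threshold - 1)]
    shares = []
    for x in range(1, num_shares + 1):
        y = 0
        for c in reversed(coeffs):  # Horner's rule evaluates f(x)
            y = (y * x + c) % PRIME
        shares.append((x, y))
    return shares


def recover_secret(shares: List[Tuple[int, int]]) -> int:
    """Lagrange interpolation at x = 0. Gives the secret only if at least
    `threshold` distinct shares are supplied; otherwise the result is unrelated noise."""
    secret = 0
    for i, (xi, yi) in enumerate(shares):
        num, den = 1, 1
        for j, (xj, _) in enumerate(shares):
            if i != j:
                num = num * (-xj) % PRIME
                den = den * (xi - xj) % PRIME
        secret = (secret + yi * num * pow(den, -1, PRIME)) % PRIME
    return secret


# Hypothetical per-account key that would protect the voucher contents.
account_key = secrets.randbelow(PRIME)
shares = split_secret(account_key, threshold=3, num_shares=10)
print(recover_secret(shares[:3]) == account_key)  # True: threshold reached
print(recover_secret(shares[:2]) == account_key)  # False except with negligible probability
```

The useful property for Apple’s claim is that the server holding two shares (two matches) learns nothing about the key, while a third share makes it fully recoverable.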

Separately, Apple will use on-device machine learning in the Messages app to analyze image attachments and determine whether a photo is sexually explicit.

On the surface, the changes to Apple’s operating systems seem fairly typical: many social media platforms and cloud storage services already match the hashes of user content against hashes of known child sexual abuse content provided by NCMEC and other sources. As far as I know, however, Apple is the first company to build this type of system into an operating system. The difference between Dropbox scanning content that users upload to its servers and Apple scanning images on customers’ own devices seems significant.
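The simplest form of this kind of server-side matching is an exact lookup of a cryptographic hash in a set of known hashes, sketched below with Python’s standard `hashlib` module. The blocklist contents and the function names are hypothetical. The sketch also shows why many services rely on perceptual hashes such as Microsoft’s PhotoDNA instead: adding a single byte to a file yields a completely different SHA-256 digest, so an exact lookup misses trivially altered copies.

```python
import hashlib
from typing import Set


def sha256_hex(data: bytes) -> str:
    """Hex-encoded SHA-256 digest of a file's raw bytes."""
    return hashlib.sha256(data).hexdigest()


# Hypothetical blocklist of known-bad file digests, held by the service on its servers.
known_bad_digests: Set[str] = {sha256_hex(b"example known file contents")}


def flag_upload(data: bytes, blocklist: Set[str]) -> bool:
    """Return True if the uploaded bytes exactly match a file in the blocklist."""
    return sha256_hex(data) in blocklist


print(flag_upload(b"example known file contents", known_bad_digests))   # True
print(flag_upload(b"example known file contents!", known_bad_digests))  # False: one extra byte
```

In this arrangement the check runs only on files after they reach the service; Apple’s design performs the comparison on the device itself, before upload.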

My concern is roughly the same as the one Green voices, although his involves hash collisions and mine involves government intervention:

“Imagine someone sends you a perfectly harmless political media file that you share with a friend. But that file shares a hash with some known child porn file?”
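Green’s scenario is easy to reproduce with a toy perceptual hash. The sketch below reuses the simple “average hash” idea (one bit per pixel of an 8x8 grayscale grid, set when the pixel is brighter than the mean); it is not Apple’s NeuralHash, and both images are made up for illustration. The two grids share no pixel values at all, yet they produce the same 64-bit hash, because the hash keeps only which pixels are above average and discards everything else.

```python
from typing import List

# Hypothetical input format: an 8x8 grid of grayscale values (0-255).
Image = List[List[int]]


def average_hash(img: Image) -> int:
    """64-bit average hash: one bit per pixel, set when the pixel is brighter than the mean."""
    pixels = [p for row in img for p in row]
    mean = sum(pixels) / len(pixels)
    bits = 0
    for p in pixels:
        bits = (bits << 1) | (1 if p > mean else 0)
    return bits


# Image A: a hard black-and-white checkerboard.
image_a = [[255 if (r + c) % 2 == 0 else 0 for c in range(8)] for r in range(8)]
# Image B: a dim gray pattern whose values (90-147) differ from A at every pixel,
# but which is brighter than its own average in exactly the same positions.
image_b = [[140 + r if (r + c) % 2 == 0 else 90 + c for c in range(8)] for r in range(8)]

print(image_a != image_b)                              # True: different pixels
print(average_hash(image_a) == average_hash(image_b))  # True: identical hash
```

Real perceptual hashes are far more elaborate than this, but they share the basic trade-off: a hash designed to ignore “unimportant” differences will, by construction, map some different images to the same value.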

Hash collisions seem like a valid concern. But I think authoritarian governments are much more likely to arbitrarily force hashes that have nothing to do with child abuse content into the database. Content shared by political dissidents seems like one likely target. Similarly, the feds could try to match the hashes of certain pictures of drugs posted on the internet against content on iPhones in an attempt to identify drug dealers or users. And Apple is not the defender of user data that it wants you to think it is.

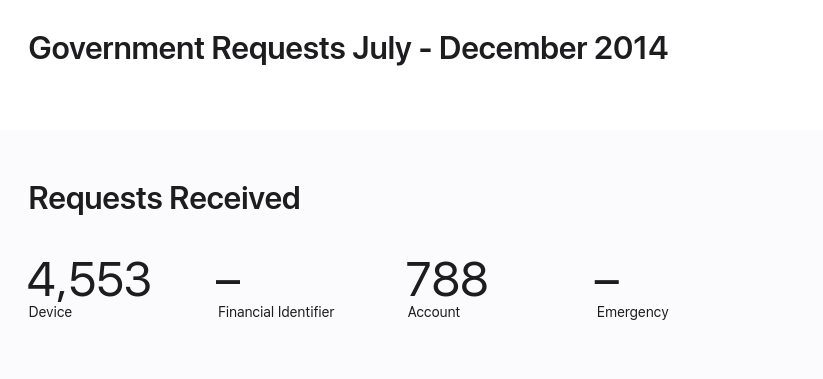

Apple has shown its willingness to bend for the FBI. This might come as a surprise to some readers, given the reputation Apple cultivated by publicly refusing the FBI access to a locked iPhone used by a suspect in the 2015 San Bernardino terror attack. The FBI admitted it did not need Apple’s help to access the iPhone in question and criticized Apple’s position as a decision based on “concern for its business model and brand marketing strategy.”

Apple cooperated with law enforcement across the globe, to the extent required by law, even before this high-profile event. It is possible, though, that the FBI’s 2016 request for a “GovtOS” was the first of its kind.

Apple also reportedly dropped its plan for encrypted iCloud backups after the FBI complained.

Apple rejected the FBI’s demands in 2016 and made good points in its court filings and public letters. Its counterarguments included essentially everything that I, and likely many readers, agree with, including the dangers of such a precedent:

> The implications of the government’s demands are chilling. If the government can use the All Writs Act to make it easier to unlock your iPhone, it would have the power to reach into anyone’s device to capture their data. The government could extend this breach of privacy and demand that Apple build surveillance software to intercept your messages, access your health records or financial data, track your location, or even access your phone’s microphone or camera without your knowledge.

The same argument Tim Cook made against the FBI in 2016 would seem to apply to Apple and law enforcement in 2021.

I do not want to fearmonger. But I think governments and law enforcement agencies take advantage of anything remotely exploitable, and this seems prone to exploitation. Of course, it is possible that Apple will somehow implement these features in a way that prevents the government from eventually using the software to hunt other types of criminals.